Yesterday the team announced the availability of a limited beta of this new functionality. They sent me a trial code, so I decided to give it a whirl. Here’s what I found…

The team also announced some of their future plans here.

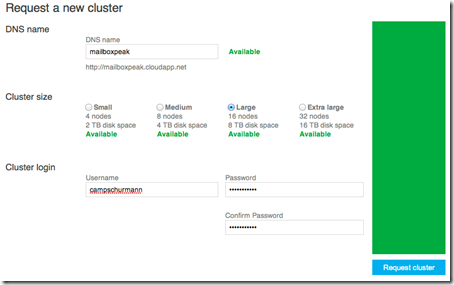

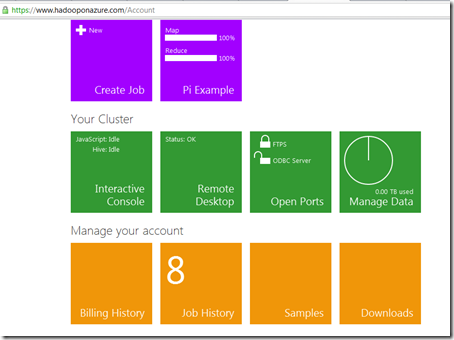

To get started at the portal, you sign in with a WLID (Windows Live ID), enter your beta code, and then choose a username and password. The portal is simple to use (Metro-style buttons). You then request that a cluster be allocated. For security reasons, I’ll show their screenshot rather than mine.

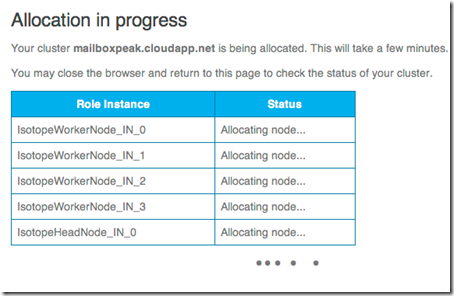

After you click ‘Request cluster’, a status screen similar to the one below shows up. For me, the cluster allocation took about 5 minutes to complete.

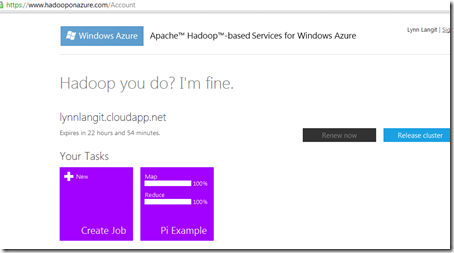

Then you are ready to start trying it out. My cluster is shown below in a couple of screenshots. The first two options let you create (and run) a MapReduce job and see the status of the most recently run jobs.

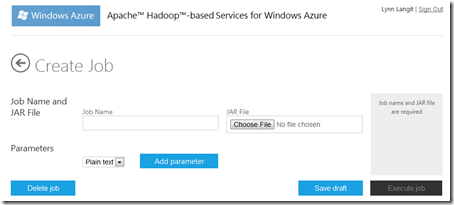

If you click ‘Create Job’, you’ll see the screen shown below. There you can run a named MapReduce job by selecting the *.jar file that contains it.
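If you are new to MapReduce, it may help to see the shape of a job before uploading a *.jar. The sketch below is only an illustration in plain Python, not what runs on the cluster (real jobs here are packaged as Java *.jar files). It runs the three phases (map, shuffle, reduce) in a single process for a word count, and all of the function names are my own, hypothetical choices.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: collapse the values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in-process over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    sample = ["big data on a cluster", "a cluster of data"]
    print(word_count(sample))
```

On a real cluster the framework distributes the map and reduce calls across nodes and handles the shuffle for you; the job code only supplies the mapper and reducer logic.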

Back on the first page, the complete page is shown below. You can see that the green buttons allow you to work with your cluster in the following ways:

1) You can run queries, in either JavaScript or Hive, directly in an interactive console (I’ll show a screenshot later in this post).

2) Next you can download a *.rdp file so that you can access your cluster via remote desktop. I tested that out and it worked just fine.

3) The third button allows you to open ports. Nothing is open by default. So that I could connect via my local Excel instance, I opened port 10000 for ODBC by clicking on this button and dragging the slider from closed to open.

4) The fourth button takes you to some options for importing data; again, I’ll show that below.
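Before pointing Excel at the cluster, it can be handy to confirm that port 10000 is actually reachable from your machine after you open it. Here is a minimal Python sketch of such a check. The host name in the usage comment is a hypothetical placeholder (substitute your own cluster's address), and the check only confirms that a TCP connection can be made, not that the Hive ODBC endpoint behind it is healthy.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS lookup failures.
        return False


# Example usage (hypothetical host name, replace with your cluster's address):
#   is_port_open("mycluster.example.com", 10000)
```

If this returns False right after you drag the slider to open, give the portal a moment and try again before troubleshooting the ODBC driver itself.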

On the next (orange) row, the buttons work as follows:

1) The first button informs you that the trial is FREE.

2) The second button shows you the history of the jobs that you have run.

3) The third button points you to a sample to start with. I’d suggest that you start there.

4) The fourth button just links you to documentation.

Trying out the Sample

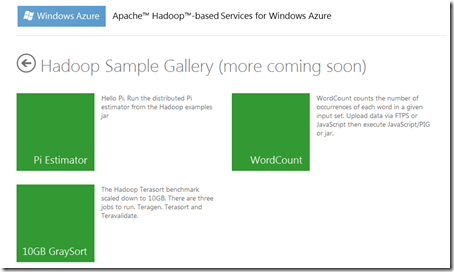

As mentioned, click the third orange button on the main console, and you’ll see the samples shown below.

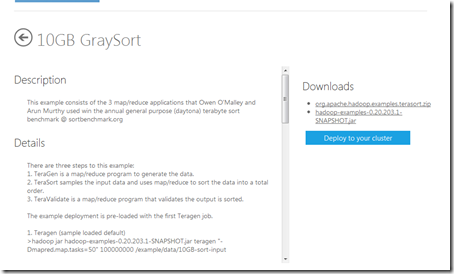

I already tried out the Pi Estimator yesterday, and it worked fine. So today I’ll try out the ‘10GB Gray Sort’. I clicked that button and saw the screen below.

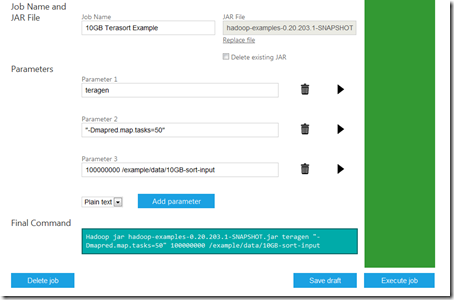
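As an aside on the Pi Estimator sample mentioned above: the idea behind it maps nicely onto MapReduce. Each map task throws points at the unit square and counts how many land inside the quarter circle, and a single reduce step combines the counts. The Python sketch below illustrates that idea with plain pseudo-random sampling. As far as I know, Hadoop's bundled example uses a quasi-Monte Carlo variant, so treat this as a simplified stand-in rather than the sample's actual code. The function names and parameters are my own.

```python
import random
from typing import Iterable, Tuple


def map_task(num_samples: int, seed: int) -> Tuple[int, int]:
    """One 'map' task: count random points that fall inside the quarter circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return inside, num_samples


def reduce_task(results: Iterable[Tuple[int, int]]) -> float:
    """The 'reduce' step: combine all partial counts into one estimate of pi."""
    inside = 0
    total = 0
    for partial_inside, partial_total in results:
        inside += partial_inside
        total += partial_total
    # Area of quarter circle / area of unit square = pi / 4.
    return 4.0 * inside / total


def estimate_pi(num_maps: int, samples_per_map: int, seed: int = 0) -> float:
    """Run every map task in-process, then reduce the partial results."""
    partials = [map_task(samples_per_map, seed + i) for i in range(num_maps)]
    return reduce_task(partials)


if __name__ == "__main__":
    print(estimate_pi(num_maps=8, samples_per_map=20_000))
```

More maps or more samples per map tighten the estimate, which is why this kind of job makes a quick smoke test for a new cluster.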

Next I clicked ‘Deploy to your cluster’ and was taken to the screen shown below.

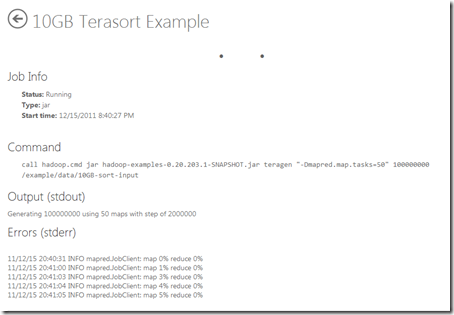

Note the parameters and the ‘Final Command’ on the screen above. Next I clicked ‘Execute Job’. The page then converted to a status screen, shown below, that refreshed itself about once per second. This job took 12 minutes to complete.

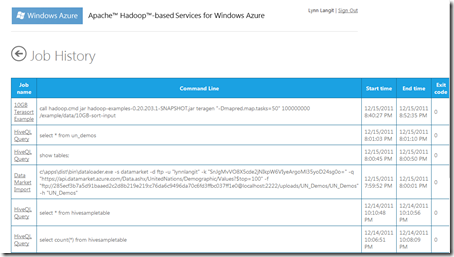
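To put the 12 minutes in perspective, here is a small back-of-the-envelope calculation in Python. It assumes that "10GB" means 10 × 10^9 bytes and that the sample uses the 100-byte records that are standard for the sort benchmark. Both are my assumptions, since I am not reading the exact parameters off the portal here.

```python
GB = 10**9          # assumption: decimal gigabytes
RECORD_BYTES = 100  # assumption: standard sort-benchmark record size


def sort_job_stats(total_bytes: int, minutes: float,
                   record_bytes: int = RECORD_BYTES) -> dict:
    """Return the record count and average throughput for a sort job."""
    seconds = minutes * 60
    return {
        "records": total_bytes // record_bytes,
        "mb_per_second": total_bytes / 1e6 / seconds,
    }


if __name__ == "__main__":
    stats = sort_job_stats(10 * GB, minutes=12)
    print(f"{stats['records']:,} records at {stats['mb_per_second']:.1f} MB/s")
```

Under those assumptions the job sorted 100 million records at roughly 14 MB/s end to end, which includes job startup and scheduling as well as the sort itself.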

Here’s the job history for this and some other jobs that I ran.

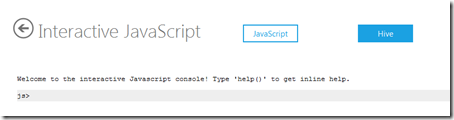

Now let’s look at the interactive console. It defaults to JavaScript, as shown below. I am going to switch to the ‘Hive’ console by clicking that button.

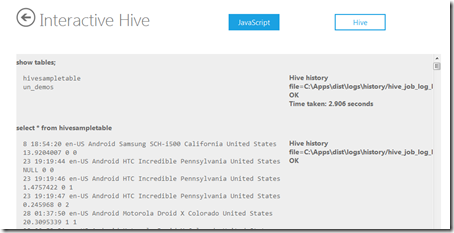

Here are a couple of simple Hive queries to get us started (shown below):

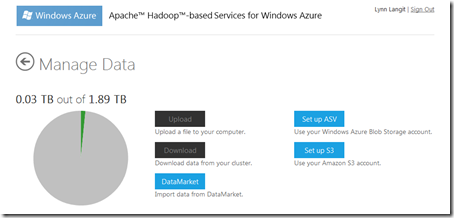

Next, if you go back to the main console and click ‘Manage Data’, you’ll see the screen below:

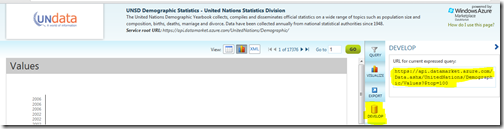

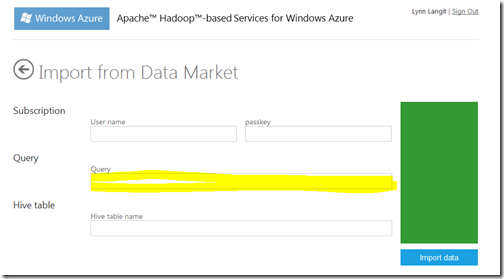

You’ll see that in this beta you can work with data from the Windows Azure Data Market, Windows Azure Blob storage, and Amazon S3. I tried importing data from the Windows Azure Data Market and it worked just fine. Shown below is the query I generated (from some ‘free’ data) in the Data Market. Notice that I clicked ‘Develop’ on the right side of the page to generate the query (highlighted), which I then pasted into the dialog box on the Hadoop page.

The blank import page in the Hadoop portal is shown below. You paste the query into the highlighted box, enter your credentials, give the new table a name, and then click the ‘Import Data’ button.

At this time, although you can enter the credentials for your Windows Azure storage account, I did not see a way in the Hadoop portal to actually do the import, and the linked documentation section is empty. The same appears to be the case for AWS S3. The ‘upload’ and ‘download’ buttons on the ‘Manage Data’ page also do not appear to be available yet in this beta.

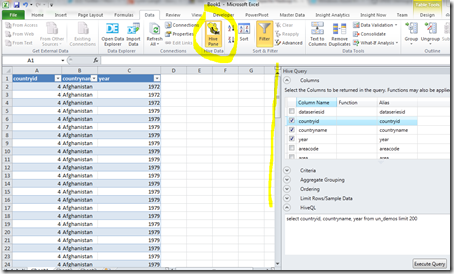

Connect to Excel

The last thing I tried out was the connectivity to Excel. I followed the instructions in the documentation linked on this portal and tried to connect to UN_samples, my newly uploaded table (from the Windows Azure Data Market), and it worked great! Note the ‘Hive’ button and task pane on the right.

Conclusion

I still have more to try out here. I am reading and learning about MapReduce and Hive and want to put this beta through a few more paces in the weeks to come. I am interested in hearing from you as well. Are you working with Hadoop yet? How’s it going?

I’ve been working with Hadoop lately, and I’ve noticed what other vendors such as IBM, QlikTech, and SAP are developing in the Big Data stack. I can clearly state that Microsoft is doing a great job regarding Big Data with its upcoming release of SQL Server 2012. Also, I liked the user-friendly experience of this beta. I would appreciate it if you could let me know how I could get a trial code as well!

Many Thanks!

Thanks for the detailed article on Hadoop. Hadoop can also be used for OLAP and OLTP processing.

We were not able to try Hadoop, since it is by invitation only.

Can you send me an invite?

Gosh! I’ve been waiting forever for them to send the code I requested. Any suggestions, Lynn?

Sorry about that – I’ll ask again when they plan to open up the beta to more users.